UEyes: Understanding Visual Saliency across User Interface Types

Abstract

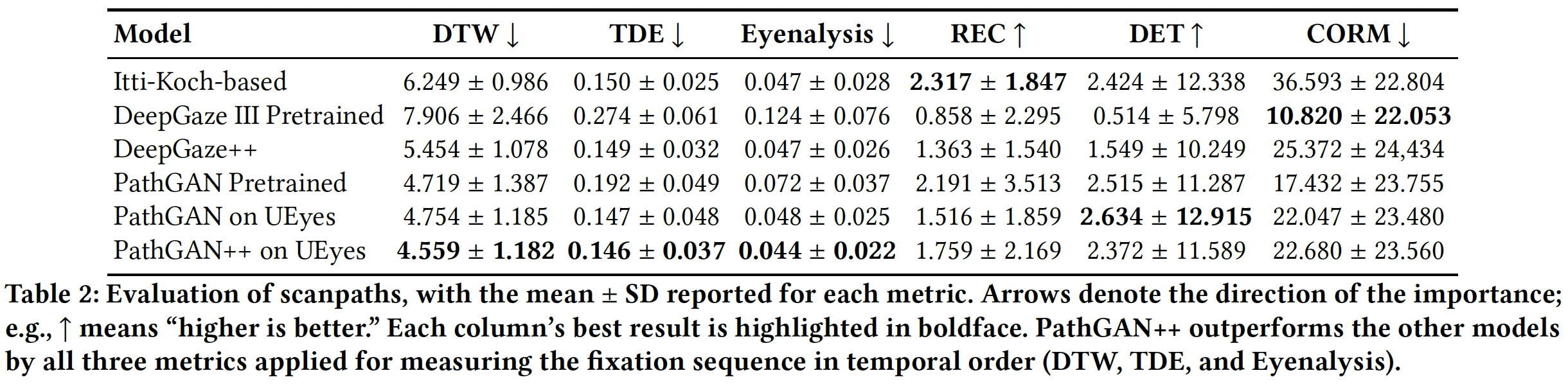

While user interfaces (UIs) display elements such as images and text in a grid-based layout, UI types differ significantly in the number of elements and how they are displayed. For example, webpage designs rely heavily on images and text, whereas desktop UIs tend to feature numerous small images. To examine how such differences affect the way users look at UIs, we collected and analyzed a large eye-tracking-based dataset, UEyes (62 participants and 1,980 UI screenshots), covering four major UI types: webpage, desktop UI, mobile UI, and poster. We analyze how these UI types differ in viewing biases related to factors such as color, location, and gaze direction. We also compare state-of-the-art predictive models and propose improvements for better capturing typical tendencies across UI types. Both the dataset and the models are publicly available.

Resources and Downloads

Correction:

We regenerated Table 2 in the paper after fixing a calibration error in the dataset. We apologize for the inconvenience.

Citation

@inproceedings{jiang2023ueyes,
  author = {Jiang, Yue and Leiva, Luis A. and Rezazadegan Tavakoli, Hamed and R. B. Houssel, Paul and Kylm\"{a}l\"{a}, Julia and Oulasvirta, Antti},
  title = {UEyes: Understanding Visual Saliency across User Interface Types},
  year = {2023},
  isbn = {9781450394215},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3544548.3581096},
  doi = {10.1145/3544548.3581096},
  booktitle = {Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems},
  articleno = {285},
  numpages = {21},
  keywords = {Computer Vision, Eye Tracking, Deep Learning, Interaction Design, Human Perception and Cognition},
  location = {Hamburg, Germany},
  series = {CHI '23}
}