Graph4GUI: Graph Neural Networks for Representing Graphical User Interfaces

Abstract

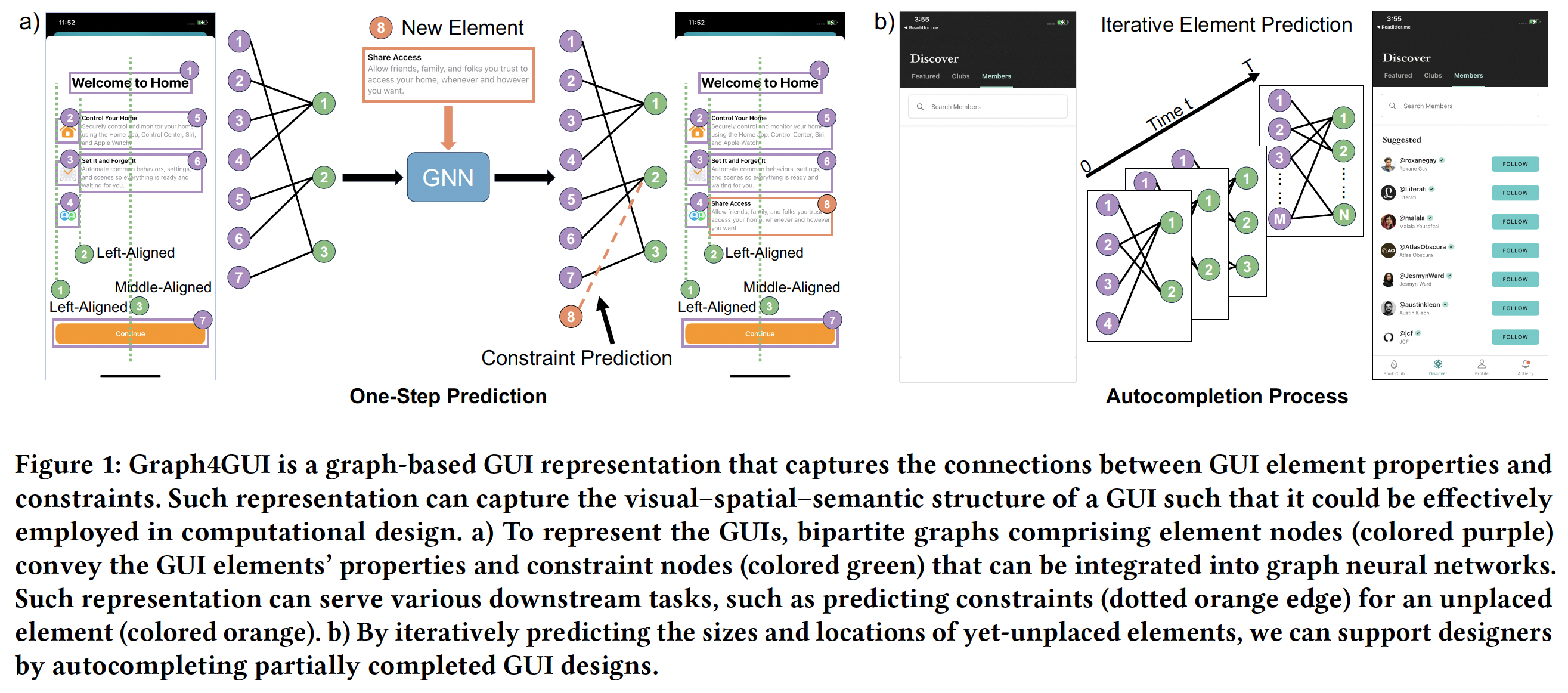

Present-day graphical user interfaces (GUIs) exhibit diverse arrangements of text, graphics, and interactive elements such as buttons and menus, but representations of GUIs have not kept up. They do not encapsulate both semantic and visuo-spatial relationships among elements. To seize machine learning's potential for GUIs more efficiently, Graph4GUI exploits graph neural networks to capture individual elements' properties and their semantic-visuo-spatial constraints in a layout. The learned representation demonstrated its effectiveness in multiple tasks, especially generating designs in a challenging GUI autocompletion task, which involved predicting the positions of remaining unplaced elements in a partially completed GUI. The new model's suggestions showed alignment and visual appeal superior to the baseline method and received higher subjective ratings for preference. Furthermore, we demonstrate the practical benefits and efficiency advantages designers perceive when utilizing our model as an autocompletion plug-in.

Citation

@inproceedings{jiang2024graph4gui,
  author = {Jiang, Yue and Zhou, Changkong and Garg, Vikas and Oulasvirta, Antti},
  title = {Graph4GUI: Graph Neural Networks for Representing Graphical User Interfaces},
  year = {2024},
  isbn = {979-8-4007-0330-0/24/05},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3613904.3642822},
  doi = {10.1145/3613904.3642822},
  booktitle = {Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems},
  keywords = {Graphical User Interface, User Interface Representation, Constraint-based Layout, Graph Neural Networks},
  location = {Honolulu, USA},
  series = {CHI '24}
}